Some Notes on the InstructGPT Paper and RLHF

Training language models to follow instructions with human feedback

Introduction

- We use reinforcement learning from human feedback (RLHF) to fine-tune GPT-3 to follow a broad class of written instructions

- InstructGPT models show improvements in truthfulness over GPT-3

- InstructGPT shows small improvements in toxicity over GPT-3, but not bias

- InstructGPT models show promising generalization to instructions outside of the RLHF finetuning distribution

Methods and experimental details

High-level methodology

- Collect demonstration data, and train a supervised policy

- Collect comparison data, and train a reward model

- Optimize a policy against the reward model using PPO
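The third step relies on PPO, whose core is a clipped surrogate objective that limits how far a single update can move the policy. The sketch below is a minimal, framework-free illustration of that objective from the original PPO formulation (not OpenAI's training code). It assumes the log-probabilities of each sampled action under the new and old policies, plus advantage estimates, are already available; in the RLHF setting the actions are generated tokens. The default `clip_eps=0.2` is only an illustrative value.

```python
import math
from typing import Sequence


def ppo_clip_objective(
    logp_new: Sequence[float],
    logp_old: Sequence[float],
    advantages: Sequence[float],
    clip_eps: float = 0.2,
) -> float:
    """Mean clipped surrogate objective (to be maximized), one term per action."""
    if not (len(logp_new) == len(logp_old) == len(advantages)) or not advantages:
        raise ValueError("inputs must be non-empty and of equal length")
    terms = []
    for new, old, adv in zip(logp_new, logp_old, advantages):
        # Probability ratio pi_new(a|s) / pi_old(a|s), computed from log-probs.
        ratio = math.exp(new - old)
        # Keep the ratio inside [1 - eps, 1 + eps].
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic choice: the smaller of the unclipped and clipped terms.
        terms.append(min(ratio * adv, clipped * adv))
    return sum(terms) / len(terms)


# One action made 1.5x more likely (positive advantage) and one made
# 2x less likely (negative advantage).
print(ppo_clip_objective([math.log(1.5), math.log(0.5)], [0.0, 0.0], [1.0, -1.0]))
```

With a positive advantage, a ratio of 1.5 is clipped to 1.2, so the policy gains nothing from moving further in that direction within one update.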

Models

- Supervised fine-tuning (SFT)

- We fine-tune GPT-3 on our labeler demonstrations using supervised learning

- Reward modeling (RM)

- Starting from the SFT model with the final unembedding layer removed, we trained a model to take in a prompt and response, and output a scalar reward

- Reinforcement learning (RL)

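- We fine-tuned the SFT model on our environment using PPO; the environment is a bandit environment that presents a random customer prompt, expects a response, produces a reward determined by the reward model, and ends the episode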
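The paper trains the reward model on pairs of responses to the same prompt $x$, where labelers preferred $y_w$ over $y_l$, using the loss $\text{loss}(\theta) = -\frac{1}{\binom{K}{2}} E_{(x, y_w, y_l) \sim D}\left[\log \sigma\left(r_\theta(x, y_w) - r_\theta(x, y_l)\right)\right]$, where $K$ is the number of ranked responses per prompt. A minimal sketch of that loss, assuming the scalar rewards have already been produced by the reward model (the model itself is abstracted away, and the function names are hypothetical):

```python
import math
from typing import Iterable, Tuple


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def pairwise_rm_loss(reward_pairs: Iterable[Tuple[float, float]]) -> float:
    """Mean of -log sigmoid(r_w - r_l) over (r_chosen, r_rejected) pairs."""
    losses = [-log_sigmoid(r_w - r_l) for r_w, r_l in reward_pairs]
    if not losses:
        raise ValueError("need at least one comparison")
    return sum(losses) / len(losses)


# The loss shrinks as the preferred response's reward pulls ahead.
print(pairwise_rm_loss([(0.0, 0.0)]))   # equal rewards: log 2
print(pairwise_rm_loss([(2.0, -1.0)]))  # correct ordering, large margin
print(pairwise_rm_loss([(-1.0, 2.0)]))  # wrong ordering
```

Averaging over all $\binom{K}{2}$ pairs from one prompt reproduces the $1/\binom{K}{2}$ normalization in the loss.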

Evaluation

- the model should follow instructions, but also infer intention from a few-shot prompt or another interpretable pattern

- Evaluations on API distribution

- human preference ratings on a held-out set of prompts from the same source as our training distribution

- Evaluations on public NLP datasets
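Preference ratings are commonly summarized as a win rate against a baseline; the paper reports how often each model's outputs were preferred to those of the 175B SFT model. A minimal sketch, assuming each judgment is recorded as `'win'`, `'tie'`, or `'loss'` from the evaluated model's perspective. Counting a tie as half a win is an illustrative convention, not necessarily the paper's.

```python
from collections import Counter
from typing import Iterable


def win_rate(judgments: Iterable[str]) -> float:
    """Fraction of comparisons won against the baseline; a tie counts as half.

    Each judgment is 'win', 'tie', or 'loss' from the evaluated model's view.
    """
    counts = Counter(judgments)
    unknown = set(counts) - {"win", "tie", "loss"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments")
    return (counts["win"] + 0.5 * counts["tie"]) / total


print(win_rate(["win", "win", "loss", "tie"]))  # (2 + 0.5) / 4
```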

Discussion

Implications for alignment research

- The cost of increasing model alignment is modest relative to pretraining.

- We’ve seen some evidence that InstructGPT generalizes ‘following instructions’ to settings that we don’t supervise it in

- We were able to mitigate most of the performance degradations introduced by our fine-tuning.

- We’ve validated alignment techniques from research in the real world

Open questions

- Many methods could be tried to further decrease the models’ propensity to generate toxic, biased, or otherwise harmful outputs.

- Getting models to do what we want is directly related to the steerability and controllability literature

- there are many other algorithms that could be used to train policies on our demonstration and comparison data to get even better results

Illustrating Reinforcement Learning from Human Feedback (RLHF)

RLHF: Let’s take it step by step

Pretraining language models

- In general, there is no clear answer on which model is the best starting point for RLHF

- The design space of options in RLHF training is not thoroughly explored

Reward model training

- The underlying goal: get a model or system that takes in a sequence of text and returns a scalar reward that numerically represents the human preference

- The training dataset of prompt-generation pairs is generated by sampling a set of prompts from a predefined dataset and passing them through the initial language model to generate new text

- OpenAI used prompts submitted by users to the GPT API

- Human annotators are used to rank the generated text outputs from the LM

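- Rankings are used instead of direct scalar scores because scores from different annotators are uncalibrated and noisy; comparing outputs creates a much better regularized dataset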

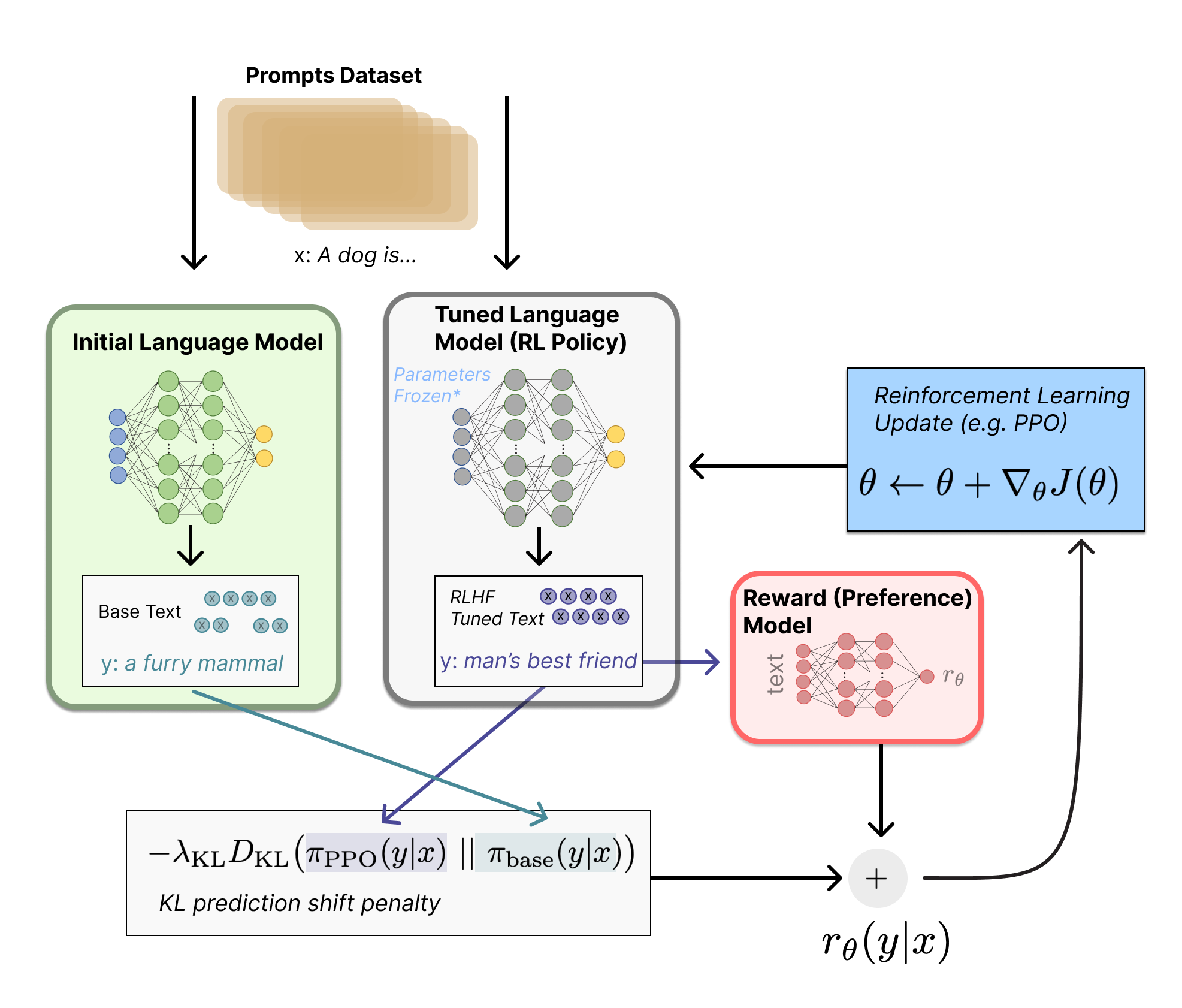
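Rankings become training examples for the reward model by taking every pair of ranked responses. In InstructGPT, labelers rank between $K = 4$ and $K = 9$ responses per prompt, which yields $\binom{K}{2}$ comparisons. A minimal sketch, assuming the responses arrive already sorted best-first and ignoring ties (the function name and response strings are hypothetical):

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def ranking_to_pairs(ranked: Sequence[str]) -> List[Tuple[str, str]]:
    """Turn a best-first ranking of K responses into (preferred, rejected) pairs.

    combinations() keeps the input order, so the first item of every pair is
    always the higher-ranked response. A ranking of K items yields K choose 2 pairs.
    """
    return list(combinations(ranked, 2))


pairs = ranking_to_pairs(["response_a", "response_b", "response_c", "response_d"])
print(len(pairs))  # 6 comparisons for K = 4
print(pairs[0])    # ('response_a', 'response_b')
```

The paper notes that comparisons from the same prompt are highly correlated, so it trains on all $\binom{K}{2}$ pairs from one prompt as a single batch element rather than shuffling them as independent data points.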

Fine-tuning with RL

- Proximal Policy Optimization (PPO)

- Formulation

- policy: a language model that takes in a prompt and returns a sequence of text

- action space: all the tokens corresponding to the vocabulary of the language model

- observation space: the distribution of possible input token sequences

- reward function: a combination of the preference model and a constraint on policy shift

- Given a prompt $x$ from the dataset, the text $y$ is generated by the current iteration of the fine-tuned policy. Concatenated with the original prompt, that text is passed to the preference model, which returns a scalar notion of “preferability”, $r_{\theta}$.

- The KL divergence term $r_{KL}$ penalizes the RL policy for moving substantially away from the initial pretrained model with each training batch

- The final reward sent to the RL update rule is $r = r_{\theta} - \lambda r_{KL}$, where $\lambda$ controls the strength of the KL penalty.

- The PPO update rule then changes the policy parameters to maximize the reward metrics in the current batch of data

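The reward $r = r_{\theta} - \lambda r_{KL}$ can be computed per sampled text. A common way to estimate $r_{KL}$ for one sample is the sum, over generated tokens, of the log-probability under the RL policy minus the log-probability under the frozen initial model. The sketch below assumes those per-token log-probabilities are already computed; `lam` stands for $\lambda$, and its default value is a hypothetical placeholder, not a value from the source.

```python
from typing import Sequence


def kl_penalized_reward(
    r_theta: float,
    policy_logprobs: Sequence[float],
    init_logprobs: Sequence[float],
    lam: float = 0.02,  # hypothetical KL coefficient, not from the source
) -> float:
    """Compute r = r_theta - lam * r_KL for one generated text.

    r_theta: scalar score from the preference model for (prompt, generated text).
    policy_logprobs / init_logprobs: log-probabilities of each generated token
    under the RL policy and under the frozen initial model.
    """
    if len(policy_logprobs) != len(init_logprobs):
        raise ValueError("log-prob sequences must cover the same tokens")
    # Sample-based KL estimate: sum of per-token log-ratios log pi_RL - log pi_init.
    r_kl = sum(p - q for p, q in zip(policy_logprobs, init_logprobs))
    return r_theta - lam * r_kl


# The policy assigns its sampled tokens higher probability than the initial model
# did, so the KL estimate is positive and the reward is reduced.
print(kl_penalized_reward(1.0, [-1.0, -2.0], [-1.5, -2.5], lam=0.1))
```

This single-sample estimate can be negative for an individual text even though the true KL divergence is non-negative; it is only correct on average over samples from the policy.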

Open-source tools for RLHF


Tools


Datasets

- There is a large dataset created by Anthropic available on the Hugging Face Hub.

What’s next for RLHF?

- Generating well-written human text answering specific prompts is very costly

- Human annotators often disagree, which adds substantial variance to training data that has no ground truth

- PPO is a relatively old algorithm, but there are no structural reasons that other algorithms could not offer benefits and permutations on the existing RLHF workflow
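Annotator disagreement can be quantified before training, for example as the fraction of annotator pairs that give the same label to the same comparison. A minimal sketch, assuming each comparison was labeled by several annotators and each label names the preferred output (the `'A'`/`'B'` labels and the function name are hypothetical):

```python
from itertools import combinations
from typing import Hashable, Sequence


def pairwise_agreement(labels_per_item: Sequence[Sequence[Hashable]]) -> float:
    """Fraction of annotator pairs that gave the same label to the same item."""
    agree = total = 0
    for labels in labels_per_item:
        # Compare every pair of annotators who labeled this item.
        for a, b in combinations(labels, 2):
            total += 1
            agree += a == b
    if total == 0:
        raise ValueError("need at least one item labeled by two or more annotators")
    return agree / total


# Two comparisons: three annotators on the first, two on the second.
print(pairwise_agreement([["A", "A", "B"], ["B", "B"]]))  # 2 of 4 pairs agree
```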